Let’s talk about Bayesian Learning.

Bayesian vs Frequentist Approach

First of all, what is meant by ‘Bayesian’ probability? How is it different from the ‘vanilla’ probability that we all studied in 9th grade?

Frequentist Approach

The ‘vanilla’ probability is actually called the Frequentist Approach. We usually calculate probability by drawing a sample from a population. The frequentist approach is about finding probabilities related to this sample and nothing more.

For example, say you have drawn a sample of 100 apples, of which 5 are rotten. The probability of picking a rotten apple from the sample is then 0.05, or 5%.

The goal of such exercises is usually to answer bigger questions like “What is the probability of an apple from a certain distribution center being rotten / substandard?” or “If we decide to source apples from a certain distributor, how many of them might be rotten / substandard?”

Because inspecting every apple is costly and cumbersome, we usually cannot answer these questions by considering ALL the apples from the distribution center. Instead, we take a sample from the population, say 100 apples.

We also know from the Law of Large Numbers that the probability estimated from a sample approaches the ‘true’ probability of the population as the sample size gets larger.
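The Law of Large Numbers can be illustrated with a small simulation. This is a sketch under assumptions: the 5% ‘true’ rate, the seed and the helper name `sample_rotten_rate` are hypothetical and chosen only for illustration. As the sample size grows, the sample rate tends to settle near the true rate, although any single run can fluctuate.

```python
import random


def sample_rotten_rate(n: int, true_rate: float, rng: random.Random) -> float:
    """Draw n apples, each rotten with probability true_rate, and return the sample proportion."""
    rotten = sum(1 for _ in range(n) if rng.random() < true_rate)
    return rotten / n


# Hypothetical "true" population rate, chosen only for illustration.
TRUE_RATE = 0.05
rng = random.Random(42)  # fixed seed so the run is reproducible

for n in (100, 1_000, 10_000, 100_000):
    estimate = sample_rotten_rate(n, TRUE_RATE, rng)
    print(f"n={n:>7}: sample rate = {estimate:.4f}, error = {abs(estimate - TRUE_RATE):.4f}")
```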

Thus, in the frequentist approach, we find the sample probability of an event and let it go at that.

Bayesian Approach

The main limitation of the frequentist approach is that it provides no avenue for incorporating context or prior beliefs into the calculations.

For example, in the apple problem that we considered in the frequentist approach, suppose we had prior information that 7% of apples from the distribution center are substandard. Maybe this is a claim by other vendors who sourced apples from that distribution center or maybe it was the result of an old study. This is what we call a prior belief.

In the frequentist approach, prior beliefs have no place, whereas in the Bayesian approach the prior belief is part of the calculation.

The Bayesian approach reflects the real-world situation in which nothing exists in a vacuum, and the same applies to probability. Prior beliefs provide what we might call context for a problem. This additional information can help us find more accurate probabilities about a population from its sample, because prior beliefs carry information from outside the sample.
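One common way to make this concrete is a Beta-Binomial update, a standard conjugate model that the text above does not itself prescribe. As an assumption, we encode the 7% prior belief as a Beta(7, 93) distribution, which behaves like having previously seen 7 substandard apples out of 100; that strength of 100 ‘pseudo-apples’ is an arbitrary choice. Combining it with the sample of 5 rotten apples out of 100 gives a posterior Beta(12, 188), whose mean of 0.06 sits between the prior’s 0.07 and the sample’s 0.05. The class name `BetaBelief` is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Belief about the rotten-apple rate, expressed as a Beta(alpha, beta) distribution."""
    alpha: float  # pseudo-count of rotten apples
    beta: float   # pseudo-count of good apples

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)

    def update(self, rotten: int, good: int) -> "BetaBelief":
        # Conjugate update: add the observed counts to the pseudo-counts.
        return BetaBelief(self.alpha + rotten, self.beta + good)


# Assumption: the 7% prior is worth as much evidence as 100 previously inspected apples.
prior = BetaBelief(alpha=7, beta=93)
posterior = prior.update(rotten=5, good=95)

print(f"prior mean     = {prior.mean():.3f}")      # 0.070
print(f"sample rate    = {5 / 100:.3f}")           # 0.050
print(f"posterior mean = {posterior.mean():.3f}")  # 0.060
```

A stronger prior (more pseudo-apples) would pull the posterior closer to 7%, while a weaker one would let the sample dominate.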

Comparison

Both the Frequentist and Bayesian approaches have their own merits, and neither is strictly superior to the other. It is a matter of which one suits a given scenario.

For many machine learning purposes, we tend to use the Bayesian approach. One major limitation of the Bayesian approach, however, is that we do not always have prior beliefs or prior probabilities available.

Note that the rest of this post is written to build an understanding of Bayesian thinking and therefore uses Bayesian terminology and reasoning.

Mathematical Preliminaries for Bayes’ Theorem

Before we look at Bayes’ Theorem, let’s quickly go through some foundational concepts.

This video is a useful reference for the derivations of the concepts below.

Conditional Probability

Suppose that there are 10 cubes and 10 balls in a bag. Among the cubes, 4 are white and 6 are black. Among the balls, 5 are white and 5 are black.

Now let’s pick an item from the bag. Given that we have picked a cube, what is the probability that it is white? That is, we need to find P(white | cube).

This is a Conditional probability question.

Let A be the event of picking a white object and B be the event of picking a cube. Note that A and B are dependent events here; that is, knowing that one has occurred changes the probability of the other.

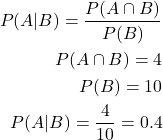

Thus,

$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}$$

This is easiest to see with the numbers shown above. In general, this formula is taken as the definition of conditional probability rather than something to be proved.
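The same calculation can be checked in Python with exact fractions. This sketch uses the color counts listed above (4 white and 6 black cubes, 5 white and 5 black balls); the `bag` dictionary and the `prob` helper are illustrative names, not part of any library. The answer depends only on the cubes, since the total number of items cancels out of the ratio.

```python
from fractions import Fraction

# Counts from the bag example: (color, shape) -> number of items.
bag = {
    ("white", "cube"): 4,
    ("black", "cube"): 6,
    ("white", "ball"): 5,
    ("black", "ball"): 5,
}
total = sum(bag.values())


def prob(predicate) -> Fraction:
    """Probability that a uniformly drawn item satisfies the predicate."""
    return Fraction(sum(n for item, n in bag.items() if predicate(item)), total)


p_white_and_cube = prob(lambda item: item == ("white", "cube"))
p_cube = prob(lambda item: item[1] == "cube")

# Conditional probability: P(white | cube) = P(white ∩ cube) / P(cube)
p_white_given_cube = p_white_and_cube / p_cube
print(p_white_given_cube)  # 2/5
```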

Product Rule

We can rewrite the formula for conditional probability as below.

$$P(A \cap B) = P(A \mid B)\,P(B) = P(B \mid A)\,P(A)$$

This is called the Product Rule.

Sum Rule

When we found the probability of B (probability of picking a cube), we considered both the white and black cubes. In essence, this is the Sum Rule.

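$$P(\text{cube}) = P(\text{white} \cap \text{cube}) + P(\text{black} \cap \text{cube})$$

More generally, writing $\bar{A}$ for "not A" (here, picking a black object):

$$P(B) = P(A \cap B) + P(\bar{A} \cap B) = P(B \mid A)\,P(A) + P(B \mid \bar{A})\,P(\bar{A})$$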
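Both rules can be checked on the bag example. This is a sketch that again uses the color counts listed earlier; the helper name `joint` is illustrative. The conditional probability is counted among the cubes only, so the product-rule check is not circular.

```python
from fractions import Fraction

# Counts from the bag example: (color, shape) -> number of items.
bag = {
    ("white", "cube"): 4,
    ("black", "cube"): 6,
    ("white", "ball"): 5,
    ("black", "ball"): 5,
}
total = sum(bag.values())


def joint(color: str, shape: str) -> Fraction:
    """P(color ∩ shape) for a uniformly drawn item."""
    return Fraction(bag[(color, shape)], total)


# Sum rule: P(cube) = P(white ∩ cube) + P(black ∩ cube)
p_cube = joint("white", "cube") + joint("black", "cube")

# P(white | cube) counted directly among the cubes only.
p_white_given_cube = Fraction(
    bag[("white", "cube")], bag[("white", "cube")] + bag[("black", "cube")]
)

# Product rule: P(white ∩ cube) = P(white | cube) * P(cube)
assert p_white_given_cube * p_cube == joint("white", "cube")

print(p_cube)              # 1/2
print(p_white_given_cube)  # 2/5
```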

Bayes’ Theorem

We can combine the equations of conditional probability, the product rule and the sum rule to get Bayes’ Theorem.

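$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)} = \frac{P(B \mid A)\,P(A)}{P(B \mid A)\,P(A) + P(B \mid \bar{A})\,P(\bar{A})}$$

where $\bar{A}$ is the complement of A (here, picking a black object).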
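Applying the formula to the bag example recovers the answer found by direct counting. In this sketch A is ‘white’ and B is ‘cube’, so P(B | A) is P(cube | white), and the denominator expands P(cube) over the two colors with the sum rule. It uses the color counts listed earlier (4 white and 6 black cubes, 5 white and 5 black balls); the function names are illustrative.

```python
from fractions import Fraction

# Counts from the bag example: (color, shape) -> number of items.
bag = {
    ("white", "cube"): 4,
    ("black", "cube"): 6,
    ("white", "ball"): 5,
    ("black", "ball"): 5,
}
total = sum(bag.values())
COLORS = ("white", "black")


def p_color(color: str) -> Fraction:
    """Prior (marginal) probability P(color)."""
    return Fraction(sum(n for (c, _), n in bag.items() if c == color), total)


def p_shape_given_color(shape: str, color: str) -> Fraction:
    """Likelihood P(shape | color), counted among items of that color."""
    color_count = sum(n for (c, _), n in bag.items() if c == color)
    return Fraction(bag[(color, shape)], color_count)


def bayes(color: str, shape: str) -> Fraction:
    """Posterior P(color | shape) via Bayes' Theorem, with the sum rule in the denominator."""
    numerator = p_shape_given_color(shape, color) * p_color(color)
    evidence = sum(p_shape_given_color(shape, c) * p_color(c) for c in COLORS)
    return numerator / evidence


print(bayes("white", "cube"))  # 2/5
```

Changing the arguments, for example `bayes("white", "ball")`, answers other questions about the same bag without recounting.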

Let’s now link this to our initial explanation of the Bayesian approach:

- P(A) is called the prior probability, our belief in A before observing B, and P(B) is called the marginal probability (or evidence).

- P(B | A) is called the likelihood: the probability of observing the evidence B if A is true.

- P(A | B) is called the posterior probability. It is the probability of A given the evidence B.

Keep this in mind as these terms will be used later in this post.

Bayesian Learning

In simple words, Bayesian learning is the process of learning by using Bayes’ Theorem.

Features of Bayesian Learning

Let’s look at some features of Bayesian learning.

- Every training example in the dataset can incrementally increase or decrease the estimated probability that a hypothesis is correct.

- We can combine prior knowledge and the given dataset to arrive at the final probability of a hypothesis.

- We can produce probability-based outcomes rather than a single prediction that rules out every hypothesis but one. For example, given a picture of a car, we can get outputs like an 80% probability that it is a Tesla and a 20% probability that it is a Ford. In this way, we know the probabilities of the competing hypotheses.

- Even when it is computationally intensive, it provides a standard of optimal decision-making against which more practical methods can be compared.
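The first and third features can be seen in a small sketch: each inspected apple updates the posterior over a set of competing hypotheses, and the result is a probability for every hypothesis rather than a single answer. The three candidate rotten-apple rates, the uniform prior and the observation stream are hypothetical and made up for illustration.

```python
from __future__ import annotations

# Hypothetical hypothesis space: each hypothesis is a possible rotten-apple rate.
RATES = {"2% rotten": 0.02, "5% rotten": 0.05, "10% rotten": 0.10}


def update(belief: dict[str, float], rotten: bool) -> dict[str, float]:
    """One Bayesian update: weight each hypothesis by the likelihood of one apple, then renormalize."""
    unnormalized = {
        h: p * (RATES[h] if rotten else 1.0 - RATES[h])
        for h, p in belief.items()
    }
    evidence = sum(unnormalized.values())  # P(observation), by the sum rule
    return {h: p / evidence for h, p in unnormalized.items()}


# Assumption: no hypothesis is favored before seeing any apples.
uniform = {h: 1 / len(RATES) for h in RATES}
belief = dict(uniform)

# Hypothetical stream of inspected apples (True = rotten).
observations = [False, False, True, False, False, False, False, False, False, False]
for i, rotten in enumerate(observations, start=1):
    belief = update(belief, rotten)
    summary = ", ".join(f"{h}: {p:.3f}" for h, p in belief.items())
    print(f"after apple {i:>2} ({'rotten' if rotten else 'good'}): {summary}")
```

A rotten apple shifts probability toward the higher-rate hypotheses, and a good apple shifts it back, which is the incremental increase or decrease described in the first feature.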

Limitations of Bayesian Learning

There are two main limitations to the Bayesian learning method:

- It is computationally expensive. This is because we need to calculate the probability for each hypothesis in the set. The complexity increases linearly with the number of candidate hypotheses.

- Prior probabilities are not always available in practice; when they are missing, they have to be estimated or assumed.

Point Estimators for Bayesian Learning

MAP Hypothesis

When evaluating the probabilities of an event, we consider a hypothesis space that contains multiple hypotheses h. Let’s call this hypothesis space H.

This space can be as simple as deciding whether the weather is windy, cloudy or sunny. Here, the hypothesis space contains three hypotheses: windy, cloudy and sunny. These are simply the outcomes we can expect for the associated classification task.

Suppose we have a dataset D that contains temperature, humidity and other weather-related data. Our task is to predict whether a particular day is windy, cloudy or sunny based on the attributes in the dataset.

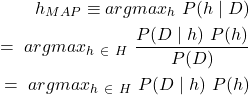

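MAP Hypothesis, or Maximum A Posteriori Hypothesis, may be defined in layman’s terms as the hypothesis in the hypothesis space that is most probable given the dataset, i.e. the hypothesis with the maximum posterior probability P(h | D). Using Bayes’ Theorem:

$$h_{MAP} = \arg\max_{h \in H} P(h \mid D) = \arg\max_{h \in H} \frac{P(D \mid h)\,P(h)}{P(D)} = \arg\max_{h \in H} P(D \mid h)\,P(h)$$

We can drop the term P(D) in the last step because it does not depend on the hypothesis h, so it does not change which hypothesis attains the maximum.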

A brute-force approach to finding the MAP hypothesis is to calculate the posterior probability P(h | D) (or, equivalently for ranking, P(D | h) P(h)) for all hypotheses h and then pick the hypothesis with the maximum probability.
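The brute-force search can be sketched for the weather example. The priors P(h) and likelihoods P(D | h) below are invented numbers for illustration, not estimates from any real dataset, and the function name `map_hypothesis` is hypothetical. The loop visits every hypothesis once, which is why the cost grows linearly with the size of H, as noted in the limitations above.

```python
from __future__ import annotations

# Hypothetical numbers for illustration only; they are not estimated from any real dataset.
PRIORS = {"windy": 0.2, "cloudy": 0.3, "sunny": 0.5}       # P(h)
LIKELIHOODS = {"windy": 0.5, "cloudy": 0.4, "sunny": 0.1}  # P(D | h) for one observed dataset D


def map_hypothesis(priors: dict[str, float], likelihoods: dict[str, float]) -> str:
    """Brute-force MAP: score every hypothesis by P(D | h) * P(h) and return the best one.

    P(D) is skipped because it is the same for every h and cannot change the argmax.
    """
    scores = {h: likelihoods[h] * priors[h] for h in priors}
    return max(scores, key=scores.get)


print(map_hypothesis(PRIORS, LIKELIHOODS))  # cloudy
```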

MLE Hypothesis

MLE, or Maximum Likelihood Estimate, is a simplified form of the MAP hypothesis. Here, we pick the hypothesis h in the hypothesis space H that maximizes the likelihood P(D | h). The difference from MAP is that we ignore the prior probability P(h).

$$h_{MLE} = \arg\max_{h \in H} P(D \mid h)$$
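With hypothetical priors and likelihoods for the weather example (invented for illustration, not taken from real weather data), MLE and MAP can disagree: the likelihood alone favors windy, but the prior pulls MAP toward cloudy. The function names are illustrative. With a uniform prior every P(h) is equal, so MAP reduces to MLE.

```python
from __future__ import annotations

# Hypothetical numbers for illustration only; not taken from real weather data.
PRIORS = {"windy": 0.2, "cloudy": 0.3, "sunny": 0.5}       # P(h)
LIKELIHOODS = {"windy": 0.5, "cloudy": 0.4, "sunny": 0.1}  # P(D | h)


def mle_hypothesis(likelihoods: dict[str, float]) -> str:
    """MLE: pick the hypothesis with the largest likelihood P(D | h); the prior is ignored."""
    return max(likelihoods, key=likelihoods.get)


def map_hypothesis(priors: dict[str, float], likelihoods: dict[str, float]) -> str:
    """MAP: pick the hypothesis with the largest P(D | h) * P(h)."""
    return max(priors, key=lambda h: likelihoods[h] * priors[h])


print("MLE:", mle_hypothesis(LIKELIHOODS))          # MLE: windy
print("MAP:", map_hypothesis(PRIORS, LIKELIHOODS))  # MAP: cloudy

# With a uniform prior, every P(h) is equal, so MAP picks the same hypothesis as MLE.
uniform = {h: 1 / len(PRIORS) for h in PRIORS}
print("MAP (uniform prior):", map_hypothesis(uniform, LIKELIHOODS))  # MAP (uniform prior): windy
```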

MAP vs. MLE Hypothesis

- Both MAP and MLE hypotheses are point estimators. Note that full Bayesian inference calculates a probability distribution over the hypotheses, while MAP and MLE each give a single value.

- The MAP hypothesis maximizes the posterior probability (P(h|D)) whereas MLE maximizes the likelihood (P(D|h)).

- We can use the MAP hypothesis when we have prior probabilities and MLE when we do not have them. Each has its usage depending on the situation.
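To see the difference between a distribution and a point estimate, we can return to the apple example. As a sketch, assuming a Beta(7, 93) prior for the 7% belief (the strength of that prior is an arbitrary choice) and a binomial likelihood, Bayesian inference yields a whole posterior distribution, Beta(12, 188), while MLE and MAP each collapse it to a single number. The function name `compare_estimates` is illustrative. With a flat Beta(1, 1) prior, the MAP estimate coincides with the MLE, which is one way to read the advice to use MLE when no prior is available.

```python
from __future__ import annotations

import math


def compare_estimates(rotten: int, n: int, a: float, b: float) -> dict[str, float]:
    """Compare MLE and MAP point estimates with the full posterior for a rotten-apple rate.

    Assumes a Beta(a, b) prior on the rate and a binomial likelihood for the sample,
    so the posterior is Beta(a + rotten, b + n - rotten).
    """
    post_a = a + rotten
    post_b = b + (n - rotten)
    total = post_a + post_b
    return {
        "MLE": rotten / n,                  # maximizes P(D | h); prior ignored
        "MAP": (post_a - 1) / (total - 2),  # posterior mode (valid when post_a, post_b > 1)
        "posterior mean": post_a / total,
        "posterior std": math.sqrt(post_a * post_b / (total**2 * (total + 1))),
    }


# Assumption: the 7% prior belief is encoded as Beta(7, 93); the strength is arbitrary.
for name, value in compare_estimates(rotten=5, n=100, a=7, b=93).items():
    print(f"{name:>14}: {value:.4f}")

# With a flat Beta(1, 1) prior, the MAP estimate coincides with the MLE.
flat = compare_estimates(rotten=5, n=100, a=1, b=1)
print(flat["MAP"] == flat["MLE"])  # True
```

The posterior standard deviation is the part a point estimate throws away: it says how uncertain we still are about the rate after seeing the sample.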