Topic Overview

- Find the Bottleneck SQL Query from the ETL Session

- Verify Data Volumes And Check for Spikes

- Find the SQL_ID of the SQL Query

- Check the SQL Query Statistics Using the SQL ID

- Find the Query Execution Plans Used by the SQL Query

- Analyze the Query Execution Plans to Find Expensive Operations

- Check if the Table-Level Statistics are Stale

- Manually Gather Statistics for the Partition and/or Table

- Finding Indexes & Partitions Defined on the Table

- Check for Better Access Paths

- Find SQL Profiles & Plan Baselines of the SQL Query Using SQL_ID

- Look at Table Archival Options

- Look at Query Rewrite Possibility

A Step-by-Step Guide for SQL Tuning for Beginners

Introduction

This is a practical guide for data engineers to tune troublesome queries. While the Oracle DBA is more suited to handle these things, data engineers end up having to do this more often than one might think.

In case you are new to SQL tuning altogether, I would suggest you check out the Black-boxing technique for best results. It explains how you can complete a hitherto unfamiliar technical task with the minimal amount of information.

This blog post is based on tuning in Oracle Database and Informatica ETL software.

Without further ado, let’s get started.

This post provides a structured direction to conduct your analysis. It does not give all details necessary for performance tuning. This is because performance tuning is a vast topic that requires specialized knowledge and decent experience with SQL.

One cannot expect to learn all of that from a single blog post. This post is about giving you the awareness necessary to navigate the tuning process. You may have to learn one or more of these topics in detail independently to actually perform the tuning.

Nonetheless, this will be enough to get you started down the rabbit hole.

Step 1: Find the Bottleneck SQL Query from the ETL Session

Let’s start with the basics. Query tuning usually starts when some ETL sessions or batch jobs slow down and affect SLAs. First, we need to find the SQL Query that’s causing the performance bottleneck.

The instructions throughout this article will be regarding tuning an existing Production query whose performance has deteriorated. I will deal with SQL Tuning techniques that can be used during the Development Phase in another post.

Check the logs to find the SQL query that is causing an issue. Different ETL tools have different ways for this.

- In Informatica, you can check the busy % to find the costly transformation and then move from there to the query.

- In ODI, you can look at the time spent per step of a procedure to find the exact bottleneck query.

- In general, you are looking for the place where the ETL session spends more time.

Once you have the SQL Query causing the performance bottleneck, move on to Step-2.

Step 2: Verify Data Volumes And Check for Spikes

Sometimes, it is the volume that causes the problem. Find out whether that’s the case.

If the query normally took 15 mins for processing 10 million records & it is now taking 45 mins for processing 50 million records, that’s not really a performance drop. In cases like this, the analysis should centre more around the increase in data volume.

If the increase in data volume is valid, then try to see if you can do some improvements regarding query performance (start from Step-3). In case that’s not possible, consider revising the job completion expectations (SLAs) as well.

If there is no appreciable spike in data volume (i.e. nothing unprecedented or noticeable), then move on to Step-3.

Step 3: Find the SQL_ID of the SQL Query

From this step onwards, we will be using the DBA_% views in Oracle. These are called Static Dictionary Views. They store run-time information of the queries, details about objects and many more things in Oracle. You can learn more about them here.

For simplicity sake, I am only including the relevant information & queries that you require for your purpose.

Your query will have a unique ID in Oracle. This SQL_ID is what will be used to identify the query across the Static Data Dictionary Views. The view that can help here is DBA_HIST_SQLTEXT 1.

You can use the below simple query to find the SQL_ID using the SQL Query:

SELECT * FROM DBA_HIST_SQLTEXT WHERE SQL_TEXT like ‘%<Part of Your SQL Query>%’;

Example:

SELECT * FROM DBA_HIST_SQLTEXT WHERE SQL_TEXT like ‘UPDATE EDW_CUST_SALES_FACT SET SALES_REP_DIM_ID%’;

The SQL_ID will look something like this – ‘5vdvxd34vgdca’.

Now we move to Step-4.

Step 4: Check the SQL Query Statistics Using the SQL_ID

Next, we look at the SQL Query’s historical statistics in the DBA_HIST% views. With this, we can find the various important processing parameters of the query. We can do this using the SQL_ID & the DBA_HIST_SQLSTAT2 view.

SELECT

TO_CHAR(SNP.BEGIN_INTERVAL_TIME,’DD-MON-YY HH24:MI’) AS “Plan Active Hour”,

STAT.PLAN_HASH_VALUE AS “PLAN_HASH_VALUE”,

STAT.EXECUTIONS_DELTA AS “Executions per Hour”,

STAT.DISK_READS_DELTA AS “Disk Reads per Hour”,

STAT.BUFFER_GETS_DELTA AS “Buffer Gets per Hour”,

STAT.ROWS_PROCESSED_DELTA AS “Rows Processed per Hour”,

STAT.CPU_TIME_DELTA AS “CPU Seconds per Hour”,

STAT.IOWAIT_DELTA AS “I/O Wait Seconds per Hour”,

STAT.ELAPSED_TIME_DELTA AS “Elapsed Seconds per Hour”

FROM

DBA_HIST_SQLSTAT STAT,

DBA_HIST_SNAPSHOT SNP

WHERE

SNP.SNAP_ID = STAT.SNAP_ID

and

sql_id = ‘5vdgcvd34vgdca’

ORDER BY SNP.BEGIN_INTERVAL_TIME desc;

Look for any relative spikes or abnormal values in the columns 4 to 9. While you may be able to spot relative spikes easily, finding abnormal values will be slightly tricky. As a shortcut, you correlate the numbers with the that of other normal queries in your database that uses similar tables.

If you find any, then you know why you have the slowness. It then needs to be drilled further down from this point.

Drilling down from here requires some understanding of the way in which databases work. I will try to enhance this post or write another one if you are interested to know this. Let me know in the comments section.

In case you have identified a spike here and do not know how to proceed, connect with the Oracle DBA to help you go further with this.

Step 5: Find the Query Execution Plans Used by the SQL Query

In this step, we will look at the various execution plans used by the same query on different days.

We can get this from the DBA_HIST_SQL_PLAN table:

SELECT * FROM DBA_HIST_SQL_PLAN WHERE SQL_ID = ‘5vhgdscvgdcvgca’;

Here is another post on the EXPLAIN PLAN command as well.

Step 6: Analyze the Query Execution Plans to Find Expensive Operations

General query analysis rules need to be applied here. The main question here is to identify the operations that are more expensive.

For instance, the below two are expensive:

- Sort operations

- Full table access

- Aggregations

Now you have to find the most expensive part of your query from the plan.

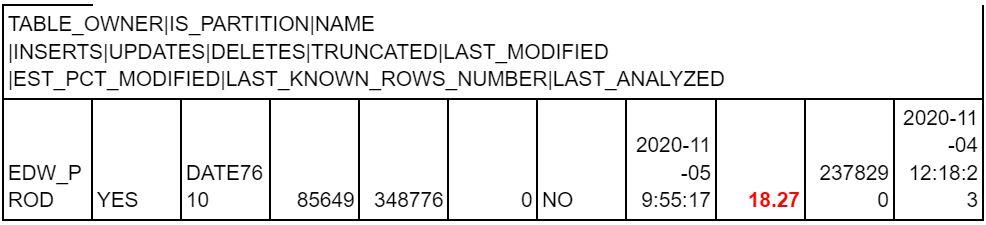

Step 7: Check if the Table-Level Statistics are Stale

The below query can help to find if the statistics on the table or its partitions are stale.

SELECT

A.PARTITION_NAME,

INSERTS+UPDATES+DELETES ALL_CHANGES,

NUM_ROWS,

LAST_ANALYZED,

TIMESTAMP,

CASE WHEN NUM_ROWS = 0 THEN NULL ELSE ROUND((((INSERTS+UPDATES+DELETES) / NUM_ROWS) * 100),2) END CHANGE_PERCENTAGE

FROM DBA_TAB_MODIFICATIONS A JOIN DBA_TABLES B ON A.TABLE_NAME = B.TABLE_NAME

WHERE A.TABLE_NAME = ‘EDW_CUST_SALES_FACT’ AND (((INSERTS+UPDATES+DELETES) / NULLIF(NUM_ROWS,0)) * 100) > 10;

The query will fetch multiple records if there are partitions on the table. The WHERE clause has been added with a filter to get only those results that has caused more than 10% change in record count.

If the table is partitioned, it could be that the active partition is the one that is stale and not necessarily the table. To find that out, we used the below query to find stale partitions:

SELECT

A.TABLE_OWNER,

A.PARTITION_NAME,

A.TRUNCATED,

A.TIMESTAMP as LAST_MODIFIED,

ROUND((((INSERTS+UPDATES+DELETES) / NUM_ROWS) * 100),2) AS CHANGE_PERCENTAGE,

P.NUM_ROWS AS RECS_AS_PER_STATS,

P.LAST_ANALYZED AS STATS_GATHERED_TIME

FROM DBA_TAB_MODIFICATIONS A, DBA_TAB_PARTITIONS P

WHERE A.TABLE_OWNER = P.TABLE_OWNER

AND A.TABLE_NAME = P.TABLE_NAME

AND A.PARTITION_NAME = P.PARTITION_NAME

and (((INSERTS+UPDATES+DELETES) / NULLIF(NUM_ROWS,0)) * 100) > 10

and P.TABLE_NAME = ‘EDW_CUST_SALES_FACT’;

Usually, automated stat gathering happens overnight & this can often be the problem.

Step 8: Manually Gather Statistics for the Partition and/or Table

If the statistics are stale, we need to gather the stats for them. We can do that by the below.

Gather Stats on Partition:

exec dbms_stats.gather_table_stats(ownname=>’EDW_SALES’,tabname=>’EDW_CUST_SALES_FACT’,partname=>’DATEKEY3000′,no_invalidate=>false,estimate_percent=>dbms_stats.auto_sample_size,cascade=>true,degree=>4);

Gather Stats on Table:

exec dbms_stats.gather_table_stats(ownname=>’EDW_SALES’,tabname=>’EDW_CUST_SALES_FACT’,no_invalidate=>false,estimate_percent=>dbms_stats.auto_sample_size,cascade=>true,degree=>4);

If this doesn’t work, move on to the next step.

Step 9: Find the Indexes & Partitions Defined on the Table

The below query can help to find the name & type of partitions defined on a table:

SELECT * FROM ALL_PART_TABLES WHERE TABLE_NAME=’EDW_CUST_SALES_FACT’;

The below query can help to find the partition values created on a table:

SELECT * FROM ALL_TAB_PARTITIONS WHERE TABLE_NAME=’EDW_CUST_SALES_FACT’ ORDER BY PARTITION_POSITION DESC;

The below query can help to find the name & type of indexes defined on a table:

SELECT * FROM ALL_INDEXES WHERE TABLE_NAME = ‘EDW_CUST_SALES_FACT’;

The below query can help to find the columns on which indexes are defined on a table:

SELECT * FROM ALL_IND_COLUMNS WHERE TABLE_NAME = ‘EDW_CUST_SALES_FACT’;

With this, we found the partitions & indexes defined on the table. We use this information at the subsequent stages of the analysis.

Step 10: Check for Better Access Paths

Here, you need understanding of access paths, how to choose an access path and experiment with them to find an optimal path. This link can help.

Also, request Oracle DBA to run the tuning advisor. This might provide more suggestions. Try them out as well. But sometimes, this comes up empty.

If this doesn’t work, move on to the next step.

Step 11: Find SQL Profiles & Plan Baselines of the SQL Query Using SQL_ID

Find SQL Profile/s linked to the SQL_ID using the below query:

select distinct

p.name sql_profile_name,

s.sql_id

from

dba_sql_profiles p,

DBA_HIST_SQLSTAT s

where

p.name=s.sql_profile and SQL_ID = ‘1razjsq42vfh9’;

Find the details of the profile (if interested) using below query:

SELECT * FROM DBA_SQL_PROFILES WHERE NAME=’SYS_SQLPROF_63265463215634321′;

Deciphering the profile is complicated, so you can skip it.

Find the SQL Baseline used by the query using below query:

SELECT * FROM DBA_SQL_PLAN_BASELINES WHERE SQL_TEXT like ‘UPDATE SNPSHT_SALES_FACT SET SALES_EQUIP_DIM_ID%’;

Sometimes, it might be that there is a more efficient plan available but the optimizer picks up the inefficient one. Here, our desired course of action is to have the query pick the more efficient plan.

Many times, it’s just a matter of changing the baselined query.

Disable the sql profile 3 and baseline for the SQL ID: 3gdvxbdcdvcd for having planhash value – 1853645635.

Plan Disabling

Profile diable/drop4:

To disable the profile, which means they will not any longer be considered by the CBO until you once again accept them:

set serveroutput on

begin

dbms_sqltune.alter_sql_profile(name=>’SYS_SQLPROF_0146af25274a0117′, attribute_name=>’STATUS’,value=>’DISABLED’);

end;

/

To completely drop the profile:

set serveroutput on

begin

dbms_sqltune.drop_sql_profile(name=>’SYS_SQLPROF_0146af25274a0117′);

end;

/

Step 12: Look at Table Archival Options

Sometimes the tables involved are too big & without proper archival mechanisms. In this case, the data build-up since the past must be what caused this. This indicates a design issue. Nonetheless, this is one of the last options to look into.

Step 13: Look at Query Rewrite Possibility

This is a risky approach, esp. In legacy systems. Why? Because it involves reverse engineering query to business rules & then reconverting them to query to get the same data set. Proper back-testing is required.

This is the last option that I would suggest. But it really depends on the specific situation.