In this post, we will look at how memory is organized in a computer. We will focus on the fundamentals here and cover more advanced topics in a future post.

Topic Overview

- What does Memory do?

- Memory Hierarchy

- Semiconductor Memory

- Moore’s Law

- Understanding SRAM

- Understanding DRAM

- What is ‘Refresh’?

- Disk Storage

- Solid State Drives (SSDs)

- Disk Attachment

- Storage Arrangement

Data Engineering for Beginners – Memory Organization

What does Memory do?

When the CPU wants to process data, the data must first be retrieved from wherever it is stored. Computers use several kinds of memory and storage for this, and each one trades off speed, capacity, and cost differently.

For instance, most data resides on a disk. When a program needs some of it, the OS uses the file system’s metadata to locate the data on the disk and loads it into Main Memory. When the CPU then accesses that data, it checks the cache first. On a cache hit, the data comes straight from the cache. On a cache miss, it is fetched from Main Memory, and a copy is usually kept in the cache for later accesses.

Broadly speaking, the cache is the fastest, followed by Main Memory, and then secondary storage devices such as hard disks.

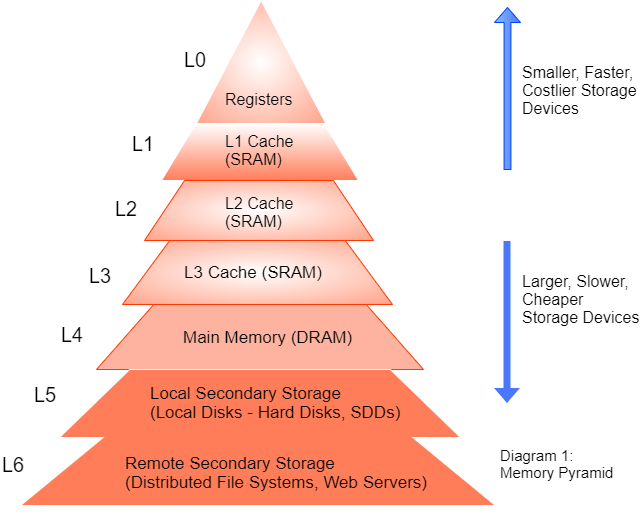

Memory Hierarchy

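The diagram below shows the memory hierarchy as a pyramid. Moving down the pyramid, each level is larger, slower, and cheaper per byte than the one above it.

- L0 refers to the CPU registers, which hold words (data/instructions) retrieved from the L1 cache. Registers are closest to the CPU and the fastest to access.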

- L1 cache holds cache lines (data/instructions) retrieved from L2 cache.

- L2 cache holds cache lines (data/instructions) retrieved from L3 cache.

- L3 cache holds cache lines (data/instructions) retrieved from Main Memory.

- Main Memory holds disk blocks (data/instructions) retrieved from local disks.

- Local Disks hold files (data/instructions) retrieved from disks on remote network servers.
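The idea that each level holds copies of data fetched from the level below can be sketched as a toy Python model. The level names, the capacities, and the least-recently-used (LRU) eviction policy are assumptions chosen for illustration; real caches move fixed-size cache lines and use hardware-specific replacement policies.

```python
from collections import OrderedDict


class Level:
    """One level of a toy memory hierarchy (illustrative only, not real hardware)."""

    def __init__(self, name, capacity, lower=None):
        self.name = name
        self.capacity = capacity    # how many items this level can hold (assumed)
        self.lower = lower          # next larger, slower level (None for the bottom)
        self.store = OrderedDict()  # address -> value, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.store:
            # Hit: serve from this level and mark the entry as recently used.
            self.hits += 1
            self.store.move_to_end(address)
            return self.store[address]
        # Miss: fetch from the level below, then keep a copy at this level.
        self.misses += 1
        if self.lower is None:
            raise KeyError(f"address {address} not found in {self.name}")
        value = self.lower.read(address)
        self.store[address] = value
        if len(self.store) > self.capacity:
            self.store.popitem(last=False)  # evict the least recently used entry
        return value


if __name__ == "__main__":
    # The capacities below are arbitrary, illustrative assumptions.
    disk = Level("disk", capacity=1_000)
    disk.store.update({addr: f"data-{addr}" for addr in range(100)})
    memory = Level("main memory", capacity=8, lower=disk)
    cache = Level("cache", capacity=2, lower=memory)

    cache.read(5)  # misses in the cache and main memory, found on disk
    cache.read(5)  # now a cache hit
    for level in (cache, memory, disk):
        print(level.name, "hits:", level.hits, "misses:", level.misses)
```

Each `read` that misses at one level falls through to the next one, mirroring the pyramid: the fastest level is checked first, and slower levels are consulted only on a miss.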

Semiconductor Memory

Semiconductor memory refers to digital memory devices made of semiconductor materials that store data as electrical charges or states in transistors. The two main types of semiconductor memory are volatile memory (such as Dynamic Random Access Memory (DRAM)) and non-volatile memory (such as flash memory and Read-Only Memory (ROM)).

Volatile memory such as DRAM needs continuous power to keep its data, while non-volatile memory retains data even when power is turned off. Semiconductor memory has replaced older forms of memory, such as magnetic core memory and vacuum tube memory, because of its much higher density, lower cost, and faster access times.

Here’s more information about the different types of semiconductor memory:

- Dynamic Random Access Memory (DRAM): A type of volatile memory that stores each bit of data in a separate capacitor within a memory cell. Because the charge leaks away, it must be refreshed every few tens of milliseconds to avoid losing the data. DRAM is widely used as a computer’s main memory.

- Static Random Access Memory (SRAM): Also a type of volatile memory, but it stores each bit of data in a flip-flop circuit. Unlike DRAM, SRAM does not need to be refreshed, and it is faster and more expensive than DRAM. SRAM is often used as cache memory in computers.

- Read-Only Memory (ROM): A type of non-volatile memory that stores data permanently; its contents cannot be altered during normal operation. ROM is used to store firmware and other essential software that must be present and unchanged when a computer starts.

- Flash Memory: A type of non-volatile memory that can be reprogrammed and offers higher storage density and lower cost than other types of non-volatile memory. Flash memory is commonly used in USB drives, digital cameras, and solid-state drives (SSDs).

- Electrically Erasable Programmable Read-Only Memory (EEPROM): A type of non-volatile memory that can be electrically erased and reprogrammed. It is used to store configuration settings and other data that may change during the lifetime of a device.

In summary, different types of semiconductor memory have different characteristics, such as cost, speed, and non-volatility, and are used in various applications depending on their strengths.

Moore’s Law

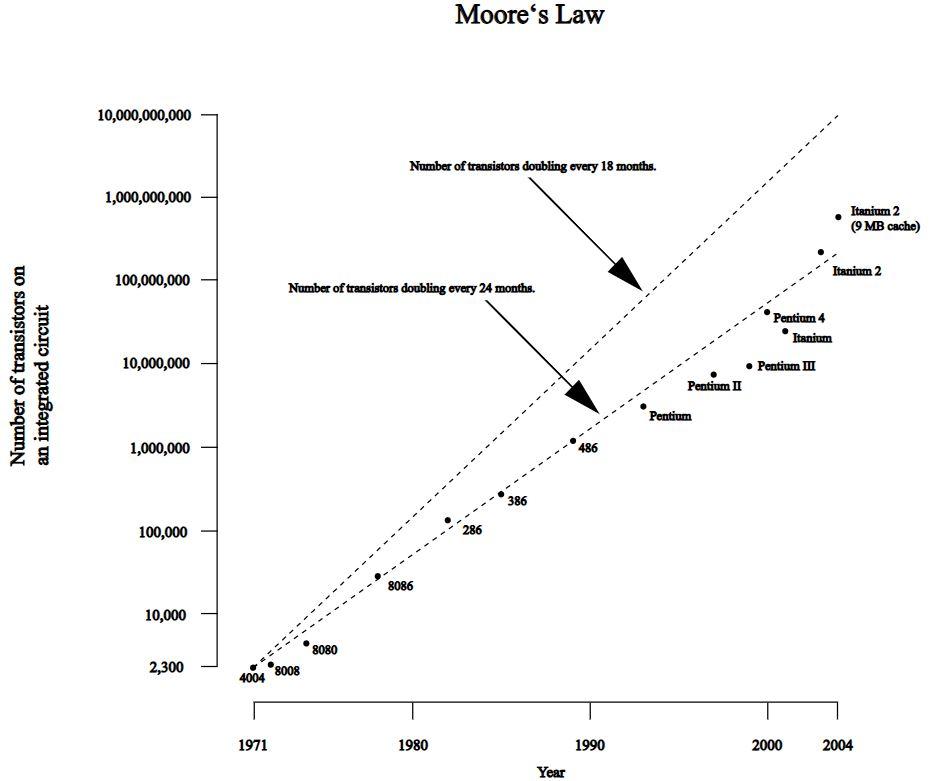

Moore’s law is an observation made in 1965 by Gordon Moore, who later co-founded Intel Corporation. He noted that the number of transistors on an integrated circuit (IC) was doubling roughly every year, and in 1975 he revised the rate to roughly every two years. More transistors per chip have meant more computing power and a lower cost per transistor, and this exponential growth has driven much of the growth of the computing industry.

The graph above illustrates the trend described by Moore’s law. The number of transistors on a chip has increased rapidly over time, bringing corresponding improvements in computing performance and reductions in cost. The trend has continued for more than 50 years and has had a major impact on the development of the computing industry.
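As a rough illustration of what "doubling every two years" implies, the count after t years is N0 × 2^(t / 2), where N0 is the starting transistor count. The function below is an idealized sketch: the fixed doubling period is the simplifying assumption, and real chips have not tracked it exactly. The 2,300-transistor starting point is roughly the Intel 4004 of 1971.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's-law projection: the count doubles once per period."""
    doublings = years / doubling_period_years
    return initial_count * 2 ** doublings


# Ten years at a two-year doubling period is five doublings: a 32x increase.
print(projected_transistors(1, 10))  # 32.0
# Starting from 2,300 transistors, 50 years of doubling gives 2,300 * 2**25.
print(projected_transistors(2_300, 50))
```

Because the growth is exponential, small changes in the assumed doubling period produce very different projections over a few decades.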

It’s important to note that the continued exponential growth predicted by Moore’s law is becoming increasingly challenging due to physical limitations, such as the difficulty of producing transistors with smaller feature sizes, and the increasing power consumption of computer chips. Nevertheless, the trend described by Moore’s law has been a driving force in the development of the computing industry and has had a profound impact on our world.

Understanding SRAM

Static Random Access Memory (SRAM) is a type of volatile memory that is commonly used in computer systems as cache memory. It stores each bit of data in a flip-flop circuit, which holds its state as long as power is supplied; like all volatile memory, it loses its contents when power is turned off.

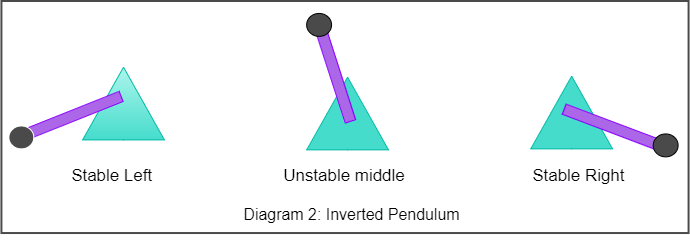

Each bit is stored in a bistable memory cell. As explained well by William Stallings, the concept is analogous to an inverted pendulum.

In the diagram above, the pendulum is stable only when it rests fully to the left or fully to the right. In any other position it is unstable and will fall toward one of those two positions.

Because of this bistable nature, an SRAM memory cell retains its value indefinitely, as long as it is kept powered. Even if a small disturbance such as electrical noise pushes it off its stable value, the circuit returns to that value once the disturbance is removed.
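The bistable behavior can be sketched numerically. An SRAM cell is built from two cross-coupled inverters, each feeding the other, so a node’s value after one round trip is `inverter(inverter(v))`. The logistic transfer curve and its steepness below are assumptions standing in for real transistor characteristics; the point is only that small disturbances die out while large ones flip the cell.

```python
import math


def inverter(v: float, steepness: float = 20.0) -> float:
    """Toy inverter on a 0..1 voltage scale: high input gives low output and vice versa.
    The logistic curve and the steepness value are illustrative assumptions."""
    return 1.0 / (1.0 + math.exp(steepness * (v - 0.5)))


def settle(v: float, rounds: int = 10) -> float:
    """Let the cross-coupled pair feed back on itself for a number of round trips."""
    for _ in range(rounds):
        v = inverter(inverter(v))
    return v


# A cell storing 1 (about 1.0 on the normalized scale) is nudged by noise to 0.7:
print(round(settle(0.7), 3))  # close to 1.0: the disturbance dies out
# A disturbance large enough to cross the midpoint flips the cell instead:
print(round(settle(0.3), 3))  # close to 0.0
```

An input of exactly 0.5 stays at 0.5: that is the unstable balance point, like the pendulum standing perfectly upright.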

Compared with Dynamic Random Access Memory (DRAM), SRAM is faster and more robust, and it does not need to be refreshed. However, SRAM is more expensive and needs more transistors per bit (typically six, versus one transistor and one capacitor for a DRAM cell), which makes it much less dense than DRAM.

The main advantage of SRAM over DRAM is speed: SRAM can access stored data much faster, and it never has to pause for refresh. This makes SRAM an ideal choice for high-speed cache memory, where fast access to data is critical.

Overall, SRAM is an important type of memory that is widely used in computer systems due to its high speed and reliability. However, its high cost and lower density compared to other types of memory limit its use to specific applications where speed is critical.

Understanding DRAM

Dynamic Random Access Memory (DRAM) is a type of volatile memory that is widely used in computer systems as main memory. It stores each bit of data in a separate capacitor within a memory cell. Because the charge in the capacitor leaks away over time, it must be refreshed periodically to maintain the stored data.

Unlike an SRAM cell, a DRAM memory cell is very sensitive to disturbances. Once the capacitor voltage is disturbed, it does not recover on its own. Even exposure to light can change the capacitor voltages.

The refresh process involves reading the data stored in the memory and then rewriting it to the same location. Refreshing is necessary to keep the data, but memory that is busy being refreshed cannot serve other requests at that moment, so refresh adds some delay and reduces the memory’s overall performance.

DRAM is less expensive and has a higher storage density than Static Random Access Memory (SRAM). However, its slower access and need for refresh make it unsuitable for applications where speed is critical, such as high-speed cache memory.

Overall, DRAM is an important type of memory that is widely used in computer systems because of its high storage density and low cost. Its slower performance compared to SRAM and its need for refresh are acceptable trade-offs for main memory, where capacity and cost matter more than raw speed.

What is ‘Refresh’?

In the context of computer memory, “refreshed” refers to the process of maintaining the stored data in Dynamic Random Access Memory (DRAM).

DRAM stores each bit of data in a separate capacitor within a memory cell. The charge in the capacitor must be periodically refreshed to maintain the stored data, as the charge in the capacitor tends to leak over time. The refresh process involves reading the data stored in the memory and then rewriting it to the same location.
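To see why periodic refresh is enough, here is a toy simulation of a single DRAM cell. The exponential leak, the 100 ms time constant, and the 0.5 sensing threshold are made-up assumptions for illustration; real retention times vary from cell to cell and with temperature. The 64 ms refresh interval mirrors the refresh window commonly specified for DDR memory.

```python
import math

LEAK_TIME_CONSTANT_MS = 100.0  # assumed: charge decays as exp(-t / tau)
SENSE_THRESHOLD = 0.5          # assumed: more charge than this reads as a 1


class DramCell:
    """Toy model of one DRAM cell: a leaky capacitor plus a sense threshold."""

    def __init__(self, bit: int):
        self.write(bit)

    def write(self, bit: int) -> None:
        self.charge = 1.0 if bit else 0.0

    def read(self) -> int:
        return 1 if self.charge > SENSE_THRESHOLD else 0

    def leak(self, elapsed_ms: float) -> None:
        self.charge *= math.exp(-elapsed_ms / LEAK_TIME_CONSTANT_MS)

    def refresh(self) -> None:
        # Refresh = read the bit, then write it back at full strength.
        self.write(self.read())


def simulate(total_ms: int, refresh_every_ms=None) -> int:
    """Store a 1, let it leak for total_ms (optionally refreshing), then read it."""
    cell = DramCell(1)
    for t in range(1, total_ms + 1):
        cell.leak(1.0)
        if refresh_every_ms and t % refresh_every_ms == 0:
            cell.refresh()
    return cell.read()


print(simulate(200))                       # 0: the charge leaked below the threshold
print(simulate(200, refresh_every_ms=64))  # 1: refreshed before it could fade
```

With these assumed numbers, an unrefreshed 1 fades to a 0 after about 69 ms (100 × ln 2), so refreshing every 64 ms keeps it readable.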

Refreshing the data in DRAM is necessary to maintain the stored data, but it also adds delay and reduces the memory’s overall performance. This is one reason why DRAM is generally slower than Static Random Access Memory (SRAM), which does not need to be refreshed and can access stored data much faster.

In summary, the term “refreshed” refers to the process of maintaining the stored data in DRAM by periodically reading and rewriting it, which is necessary to prevent data loss due to leakage of the charge stored in the memory’s capacitors.

Disk Storage

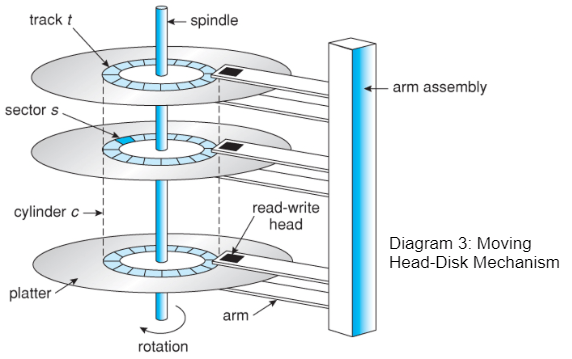

Disk storage, also known as hard disk drive (HDD) or magnetic disk storage, is a type of non-volatile storage that uses rotating disks (platters) coated with magnetic material to store data. The platters are attached to a spindle and spin at high speed, typically 5400 or 7200 RPM in consumer drives; some enterprise drives spin at 10,000 or 15,000 RPM.

Data is stored on the disks in the form of magnetic patterns representing binary information (0s and 1s). The magnetic patterns are read and written to the disks by a read/write head, which is attached to a moving arm and positioned over the disk surface.

The read/write head reads the magnetic patterns on the disk surface by detecting the magnetic field produced by the patterns. To write data to the disk, the read/write head applies a magnetic field to the disk surface to change the magnetic patterns and encode the binary information.

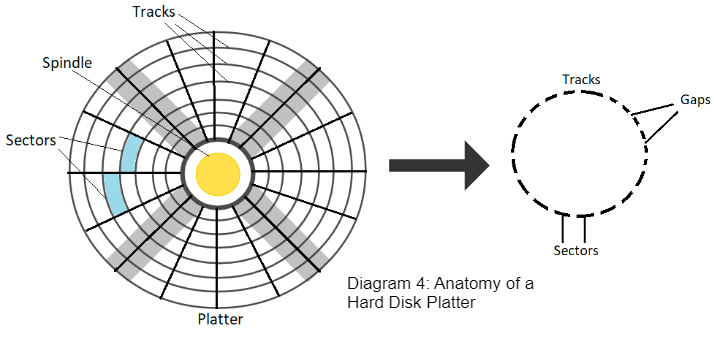

Data is stored on the disk in concentric circles called tracks. Each track is divided into smaller sections called sectors, which hold the actual data (traditionally 512 bytes each; many newer drives use 4,096-byte sectors). The disk controller manages reading and writing by controlling the position of the read/write head and the rotation of the disk.
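The spindle speed sets a floor on access time: after the head reaches the right track, it still has to wait for the target sector to rotate underneath it. On average that wait is half a revolution, a standard simplifying assumption. A small helper makes the arithmetic explicit for a few common spindle speeds:

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait for a sector to arrive under the head: half of one revolution
    (standard simplifying assumption)."""
    ms_per_revolution = 60_000 / rpm  # 60,000 ms per minute / revolutions per minute
    return ms_per_revolution / 2


for rpm in (5400, 7200, 15000):
    print(f"{rpm} RPM: {avg_rotational_latency_ms(rpm):.2f} ms")
```

At 7200 RPM this comes to about 4.17 ms, before any time spent moving the arm to the right track (seek time) is added.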

The following steps are involved in accessing data on a hard disk:

- A program requests data stored on the hard disk, for example by reading a file.

- The operating system locates the requested data on the disk using file system data structures such as the File Allocation Table (FAT) or the Master File Table (MFT) used by NTFS.

- The disk controller moves the read/write head to the correct track, waits for the target sector to rotate under the head, and reads the data from the platter.

- The data is temporarily stored in the drive’s cache or buffer.

- The disk controller transfers the data into the system’s main memory, where the CPU can access it.

- The CPU processes the data as per the request.

- The results are then stored back to the hard disk or displayed on the screen, as required.
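The second step can be made concrete with a simplified sketch of how a FAT-style file system finds a file’s data. In FAT, the directory entry records the file’s first cluster, and the table entry for each cluster holds the number of the next one, so a file is a linked list of clusters. The in-memory dict, the `END_OF_CHAIN` marker, and the example values are hypothetical simplifications; real FAT volumes store the table on disk and use reserved values to mark the end of a chain.

```python
END_OF_CHAIN = -1  # hypothetical marker; real FAT variants use reserved values


def cluster_chain(fat: dict, first_cluster: int) -> list:
    """Follow the FAT linked list from a file's first cluster to the end of the chain."""
    chain = []
    seen = set()
    cluster = first_cluster
    while cluster != END_OF_CHAIN:
        if cluster in seen:
            raise ValueError(f"corrupt FAT: cluster {cluster} appears twice")
        seen.add(cluster)
        chain.append(cluster)
        cluster = fat[cluster]
    return chain


def first_sector_of_cluster(cluster: int, first_data_sector: int,
                            sectors_per_cluster: int) -> int:
    """Data clusters are numbered from 2, so cluster 2 starts the data region."""
    return first_data_sector + (cluster - 2) * sectors_per_cluster


# Hypothetical table: the file starts at cluster 2 and continues at 5, 6, then 9.
fat = {2: 5, 5: 6, 6: 9, 9: END_OF_CHAIN}
for c in cluster_chain(fat, 2):
    print("cluster", c, "-> sector", first_sector_of_cluster(c, 100, 8))
```

The resulting sector numbers are what the controller is then asked to read in the next step.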

In summary, disk storage uses rotating disks coated with magnetic material to store data in the form of magnetic patterns. The data is read and written to the disk by a read/write head that detects and changes the magnetic patterns on the disk surface. The disk controller manages the reading and writing of data to the disk by controlling the position of the read/write head and the movement of the disk.

Solid State Drives (SSDs)

Flash Memory

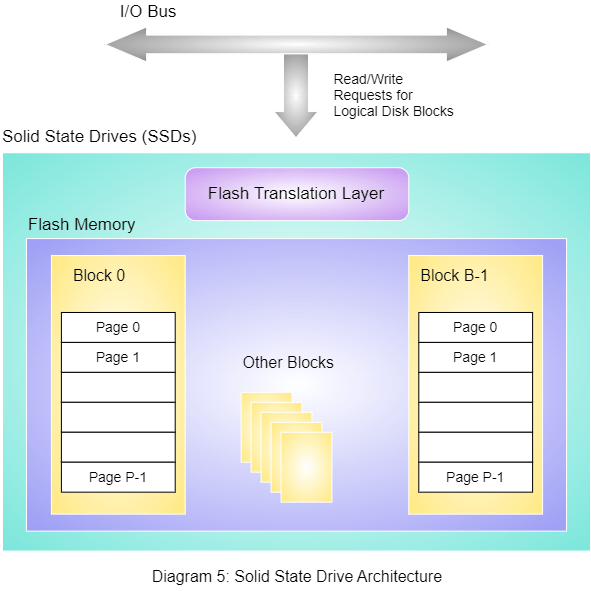

Flash memory is a type of non-volatile memory that stores data by trapping electrons in a grid of floating gates on a silicon chip. Unlike volatile memory (such as RAM), flash memory retains its stored data even when the power is turned off.

A flash memory chip is a tiny piece of silicon that contains the flash memory and is used in a variety of devices, including SSDs, USB flash drives, memory cards, and smartphones. Flash memory chips come in various sizes and capacities, and they can be combined to make larger storage devices.

Flash memory chips are fast and durable, and they suit devices that need fast access to data, low power consumption, and a compact form factor. Flash memory can also be written and erased many times (though not indefinitely), which makes it well suited to devices whose data changes frequently, such as smartphones and digital cameras.

How do SSDs Work?

A Solid State Drive (SSD) is a type of data storage device that uses NAND-based flash memory to store data persistently. Unlike traditional hard drives that have spinning disks, SSDs have no moving parts, making them faster, quieter, and more durable.

In simple terms, an SSD stores data in interconnected flash memory chips. When a user saves a file, the SSD splits the data into small units called pages and stores them across the flash chips. When the user wants to access the data, the SSD reads the pages back from the flash chips and sends the data to the computer.

Since SSDs have no moving parts, they can access data much faster than traditional hard drives, making them ideal for tasks that require quick access to data, such as booting up an operating system or loading large files. Additionally, since SSDs are more durable than traditional hard drives, they are less likely to fail due to physical shock or wear and tear.

Performance Characteristics: HDDs vs. SSDs

Here are some key performance characteristics that differentiate Solid State Drives (SSDs) from Hard Disk Drives (HDDs):

Speed: SSDs are much faster than HDDs in terms of data access speed. SSDs can access data almost instantly, while HDDs can take several milliseconds to access data due to their mechanical nature.

Sequential Read/Write Speed: SSDs have much higher sequential read/write speeds than HDDs. This makes SSDs better suited for tasks that involve large amounts of data, such as video editing or gaming.

Random Read/Write Speed: SSDs are significantly faster than HDDs when it comes to random read/write operations. This makes SSDs better suited for applications that require many small data transactions, such as booting an operating system or running multiple applications at once.

Durability: SSDs are more durable than HDDs because they have no moving parts that can wear out or break. SSDs are less likely to fail due to physical shock or wear and tear.

Capacity: HDDs are typically available in larger capacities than SSDs. While SSD capacities are rapidly increasing, HDDs still offer higher storage capacities at lower prices.

Power Consumption: SSDs consume less power than HDDs, making them a better choice for laptops or other devices that run on battery power.

Price: HDDs are typically less expensive per unit of storage than SSDs. However, SSD prices have been declining rapidly, and the cost difference between the two technologies is becoming smaller.

In conclusion, SSDs are generally faster, more durable, and more energy-efficient than HDDs. However, HDDs offer higher storage capacities at a lower cost per unit of storage. The choice between an SSD and an HDD depends on the specific needs and requirements of the user.
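The Speed and Random Read/Write points follow from simple arithmetic. For each random read, an HDD must move the arm to the right track (seek) and then wait for the sector to rotate under the head, so it can complete at most about 1000 / (seek + rotational latency) such reads per second. The 9 ms average seek time below is an assumed, illustrative figure, not a measurement of any particular drive.

```python
def hdd_random_reads_per_second(avg_seek_ms: float, rpm: float) -> float:
    """Upper-bound estimate of random reads/s, ignoring transfer and queuing time."""
    avg_rotational_ms = (60_000 / rpm) / 2  # wait half a revolution on average
    ms_per_read = avg_seek_ms + avg_rotational_ms
    return 1000 / ms_per_read


# Assumed 9 ms average seek on a 7200 RPM drive: roughly 76 random reads per second.
print(round(hdd_random_reads_per_second(avg_seek_ms=9.0, rpm=7200)))
```

An SSD has no arm to move and no platter to wait for, which is why it can serve many thousands of random reads per second instead.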

Disadvantages of SSDs

Here are some disadvantages of Solid State Drives (SSDs):

Cost: Although the price of SSDs has come down in recent years, they are still more expensive per unit of storage than traditional Hard Disk Drives (HDDs).

Limited Write Cycles: Flash memory has a limited number of write cycles before it begins to wear out. This can limit the lifespan of an SSD, although most SSDs are designed to last for several years under normal use.

Capacity: Although SSD capacities are increasing, HDDs still typically offer more storage for the same price.

Write Speed: An SSD’s write speed is usually lower than its read speed, and on some drives sustained large writes slow down noticeably. This can be an issue for users who need to write large amounts of data frequently.

Compatibility: Some older computer systems may not support the use of SSDs, or may require a firmware update to work with them.

Defragmentation: Traditional defragmentation tools rewrite large amounts of data. On an SSD this brings little benefit, because there is no read/write head that has to seek, while the extra writes use up the drive’s limited write cycles and can shorten its lifespan.

Wear-Leveling Mechanism

Wear leveling is a technique used in Solid State Drives (SSDs) to distribute write operations evenly across the entire flash memory, so that no single memory block wears out faster than others.

In an SSD, data is stored in blocks of flash memory. Each time a block is written to, the number of available write cycles for that block decreases. If one block is written to more frequently than others, it will wear out faster and reduce the overall lifespan of the SSD.

Wear leveling logic in an SSD is responsible for ensuring that write operations are distributed evenly across all memory blocks. This is achieved by mapping logical block addresses (LBA) to physical block addresses (PBA) in a way that spreads out write operations evenly. For example, when a block of data is written to, the SSD’s wear leveling logic may choose to write it to a different physical block than the previous time.
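The logical-to-physical remapping can be sketched as a toy wear leveler in Python. Everything here is a simplifying assumption: each logical block maps to one whole physical block, a rewrite always goes to the least-worn free block, and the old copy is erased and returned to the free pool. Real SSD firmware works with pages inside erase blocks, runs garbage collection, and also relocates rarely changing data.

```python
class ToyWearLeveler:
    """Maps logical block addresses (LBAs) to physical block addresses (PBAs).
    Simplified model for illustration, not real SSD firmware."""

    def __init__(self, num_physical_blocks: int):
        self.erase_counts = [0] * num_physical_blocks
        self.free = set(range(num_physical_blocks))
        self.mapping = {}  # LBA -> PBA

    def write(self, lba: int) -> int:
        if not self.free:
            raise RuntimeError("no free physical blocks")
        # Pick the free block with the fewest erases (lowest index breaks ties).
        pba = min(self.free, key=lambda b: (self.erase_counts[b], b))
        self.free.remove(pba)
        old_pba = self.mapping.get(lba)
        if old_pba is not None:
            # The stale copy is erased before its block can be reused.
            self.erase_counts[old_pba] += 1
            self.free.add(old_pba)
        self.mapping[lba] = pba
        return pba


ftl = ToyWearLeveler(num_physical_blocks=4)
for _ in range(100):
    ftl.write(lba=0)  # the same logical block is rewritten 100 times
print(ftl.erase_counts)  # [25, 25, 25, 24]
```

Without remapping, all 99 erases would land on a single physical block; here they are spread almost evenly across the four.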

Wear leveling also helps to ensure that the SSD’s performance remains consistent over time. By spreading write operations evenly, wear leveling minimizes the number of blocks that are near their end of life, which can slow down the SSD.

In conclusion, wear leveling is an important technique used in SSDs to extend their lifespan and maintain consistent performance. It helps to ensure that write operations are distributed evenly across all memory blocks, so that no single block wears out faster than others.

Disk Attachment

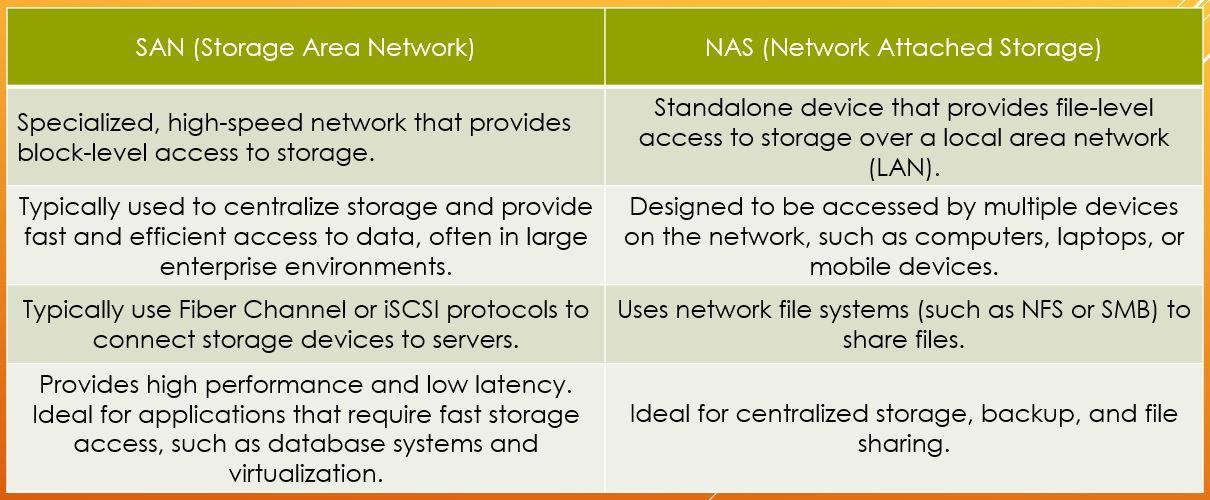

Network Attached Storage (NAS) and Host Attached Storage (HAS) are two different types of storage systems used for storing and accessing data.

Network Attached Storage (NAS)

- NAS is a standalone device that is connected to a local area network (LAN) and provides network-based storage.

- NAS is designed to be accessed by multiple devices on the network, such as computers, laptops, or mobile devices.

- NAS typically has its own IP address and uses a network file system (such as NFS or SMB) to share files.

- NAS is typically used for centralized storage and backup, as well as for file sharing and collaboration.

Host Attached Storage (HAS)

- HAS refers to storage devices that are directly attached to a host computer or server.

- HAS devices can be internal, such as a hard disk drive (HDD) or solid state drive (SSD), or external, such as a USB drive or a FireWire drive.

- HAS devices are typically faster than NAS devices due to the direct connection to the host computer.

- HAS is typically used for applications that require high performance and low latency, such as video editing or gaming.

In conclusion, NAS and HAS are different types of storage systems that are suited to different use cases. NAS is best for centralized storage, backup, and file sharing, while HAS is best for high-performance applications that require low latency.

Storage Area Network (SAN)

A Storage Area Network (SAN) is a specialized, high-speed network that provides block-level access to storage. SANs are used to centralize storage and provide fast and efficient access to data.

A SAN typically consists of a network of storage devices (such as disk arrays, tape libraries, and jukeboxes), connected to one or more servers (hosts) via a high-speed network (such as Fibre Channel or iSCSI). The storage devices are managed by a storage controller, which presents the storage to the hosts as a set of block-level devices.

The primary benefit of a SAN is the ability to separate storage from computing, allowing the storage to be managed and scaled independently. This can improve performance, availability, and scalability, as well as simplify storage management.

Another key feature of a SAN is the ability to create redundancy and backup for critical data. This can be achieved by using techniques such as disk mirroring (RAID 1) or disk striping with parity (RAID 5).
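The parity idea behind RAID 5 is a bytewise XOR: the parity block is the XOR of the data blocks in a stripe, so any one missing block can be rebuilt by XOR-ing everything that survives. The sketch below shows this for a single stripe with made-up example data; real RAID 5 also rotates the parity block across disks, which is omitted here. Mirroring (RAID 1) needs no arithmetic, since each block is simply written to two disks.

```python
from functools import reduce


def xor_blocks(blocks):
    """Bytewise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe spread over three data disks, plus a parity disk (example data).
data = [b"ABCD", b"1234", b"wxyz"]
parity = xor_blocks(data)

# Suppose the disk holding data[1] fails: XOR the survivors with the parity.
rebuilt = xor_blocks([data[0], data[2], parity])
print(rebuilt)  # b'1234'
```

This only works for one failed disk per stripe: with two blocks missing, the XOR equation has two unknowns.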

SANs are commonly used in enterprise-level computing environments, where the need for high performance, availability, and scalability is paramount. They are also commonly used for data backup and disaster recovery.

In conclusion, a Storage Area Network (SAN) is a high-speed network that provides block-level access to storage, allowing for centralized management and improved performance, availability, and scalability. SANs are used in enterprise-level computing environments and for data backup and disaster recovery.

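SAN vs. NAS

The key difference is the level at which storage is shared. A NAS device shares files over an ordinary LAN using a network file system such as NFS or SMB, while a SAN gives hosts block-level access to storage over a dedicated high-speed network such as Fibre Channel or iSCSI.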

Storage Arrangement

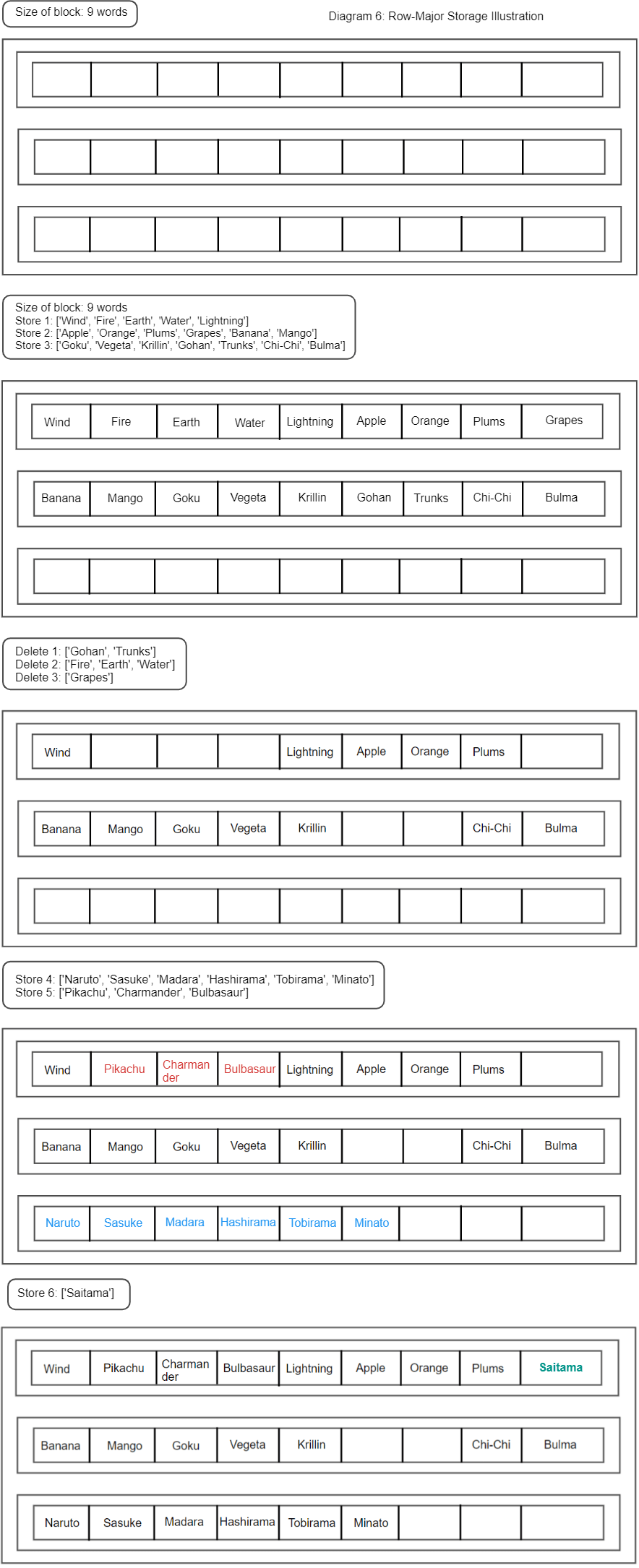

In this section, we will look at how data is laid out on a storage device.

Think of the storage area as a grid of blocks.

Data is written and read horizontally, from left to right, row by row. Once most of the space has been used and earlier data has been deleted, leaving gaps, the writer looks for empty spaces large enough to fit the incoming data. If no single empty space can hold all of it, the writer splits the data into multiple chunks and stores them in whichever empty spaces are available, even if they are not next to each other.

Filling the grid row by row, left to right, follows a row-major order, and data that ends up split across non-adjacent spaces is said to be fragmented.
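The allocation behavior described above can be sketched in Python, assuming the device is a row of equal-size blocks and each file needs a whole number of blocks. The writer first scans left to right for a contiguous run of free blocks big enough for the data (a first-fit search), and only if no single gap is large enough does it split the data across separate gaps. The `allocate` and `delete` helpers and the block layout are hypothetical, chosen for illustration.

```python
def allocate(blocks: list, file_id: str, size: int) -> list:
    """Hypothetical helper: place `size` blocks of `file_id`, preferring one
    contiguous run (first fit) and splitting across gaps only if needed."""
    free = [i for i, owner in enumerate(blocks) if owner is None]
    if len(free) < size:
        raise RuntimeError("not enough free blocks")
    # 1. Scan left to right for the first run of free blocks that is long enough.
    run_start, run_length = 0, 0
    for i, owner in enumerate(blocks):
        if owner is None:
            if run_length == 0:
                run_start = i
            run_length += 1
            if run_length == size:
                chosen = list(range(run_start, run_start + size))
                break
        else:
            run_length = 0
    else:
        # 2. No single gap is big enough: split the data across separate gaps.
        chosen = free[:size]
    for i in chosen:
        blocks[i] = file_id
    return chosen


def delete(blocks: list, file_id: str) -> None:
    """Hypothetical helper: mark every block owned by `file_id` as free."""
    for i, owner in enumerate(blocks):
        if owner == file_id:
            blocks[i] = None


disk = [None] * 8
allocate(disk, "A", 3)         # blocks 0, 1, 2
allocate(disk, "B", 2)         # blocks 3, 4
allocate(disk, "C", 2)         # blocks 5, 6
delete(disk, "B")              # leaves gaps at blocks 3, 4 and 7
print(allocate(disk, "D", 3))  # [3, 4, 7]: D is split into two chunks
print(disk)
```

File D ends up in two separate chunks, which is the fragmentation the text describes.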

References:

- Stallings, William (2016). Computer Organization and Architecture (Tenth Edition). Pearson.

- Julben, CC BY 3.0 <https://creativecommons.org/licenses/by/3.0>, via Wikimedia Commons

- https://www.cs.uic.edu/~jbell/CourseNotes/OperatingSystems/10_MassStorage.html